
Mobilint NPU – LLM Inference Demo Container

About Advantech Container Catalog (ACC)

Advantech Container Catalog is a comprehensive collection of ready-to-use, containerized software packages designed to accelerate the development and deployment of Edge AI applications. By offering pre-integrated solutions optimized for embedded hardware, ACC simplifies the challenge of software-hardware compatibility, especially in GPU/NPU-accelerated environments.

| Feature / Benefit | Description |
|---|---|
| Accelerated Edge AI Development | Ready-to-use containerized solutions for faster prototyping and deployment |
| Hardware Compatible | Eliminates embedded hardware and software package incompatibility |
| GPU/NPU Access Ready | Supports passthrough for efficient hardware acceleration |
| Model Conversion & Optimization | Built-in AI model quantization and format conversion support |
| Optimized for CV & LLM Applications | Pre-optimized containers for computer vision and large language models |

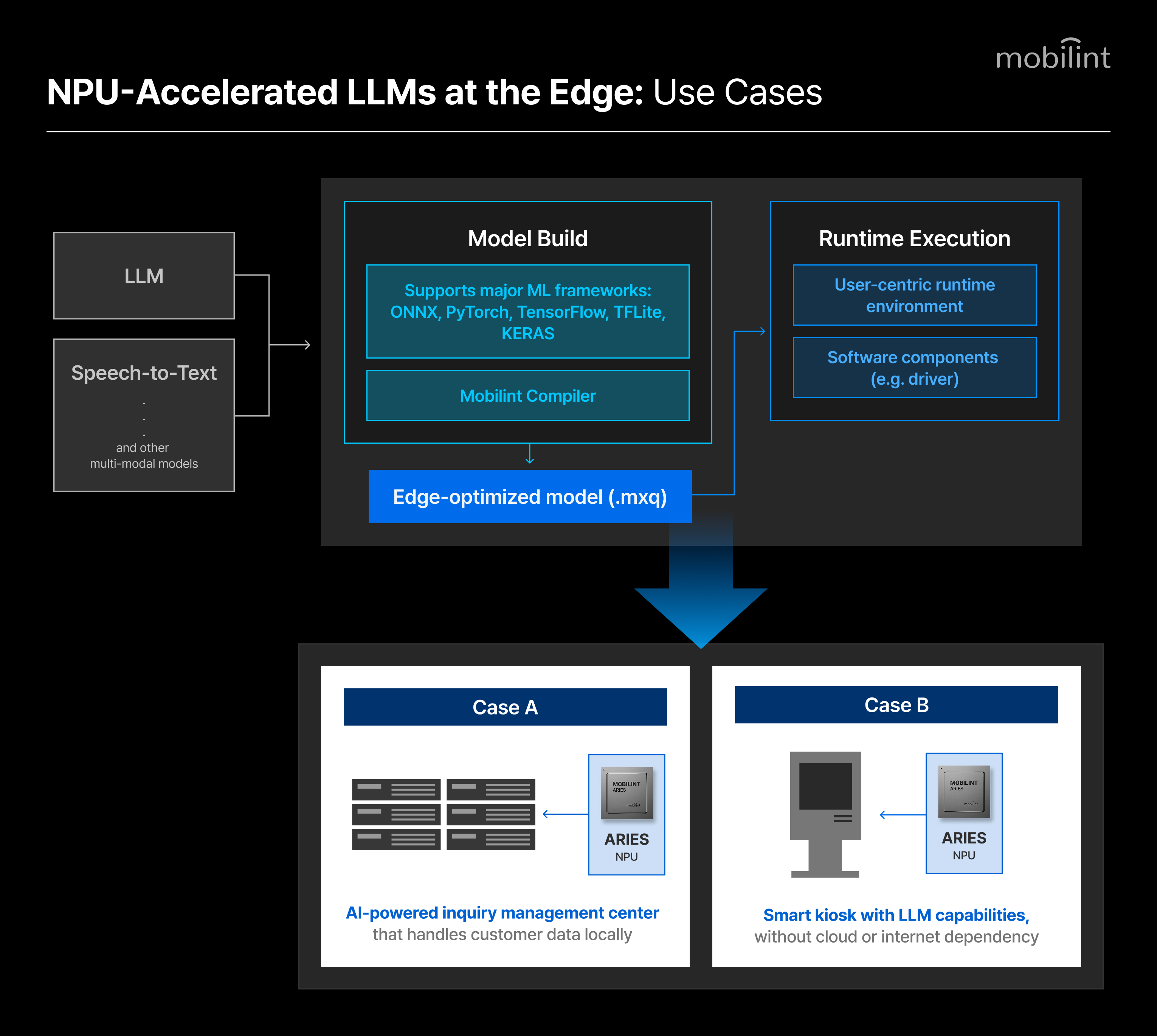

The Mobilint NPU LLM Inference Demo Container provides a fully integrated, ready-to-run environment for executing various large language models (LLMs) locally on Advantech’s edge AI devices embedded with Mobilint’s ARIES-powered MLA100 MXM AI accelerator module.

Overview

This edge LLM demo container features a user-friendly web-based GUI that allows users to select from a list of pre-compiled LLMs without any command-line configuration. It is designed for quick evaluation and demonstration of ARIES’s NPU acceleration in real-world LLM workloads.

All required runtime components and model binaries are preloaded to ensure a smooth out-of-the-box experience. Users can test different models and parameters from the GUI without editing configuration files or entering commands.

Key Features

- Browser-based GUI – Model selection and inference execution from a single dashboard

- Pre-compiled model set – Includes INT8-quantized LLMs

- Optimized Runtime Library – Hardware-accelerated inference on ARIES NPUs, with Python and C++ backend integration for extended development

Mobilint NPU – LLM Performance Report

All LLM metrics were measured with NVIDIA GenAI-Perf, using 240 input tokens and 10 output tokens per request.

| Model | Time to First Token (ms) | Output Token Throughput per User (tokens/sec/user) |
|---|---|---|
| c4ai-command-r7b-12-2024 | 4,667.31 | 4.58 |
| EXAONE-3.5-2.4B-Instruct | 963.86 | 14.23 |
| EXAONE-4.0-1.2B | 329.37 | 31.62 |
| EXAONE-Deep-2.4B | 886.35 | 13.03 |
| HyperCLOVAX-SEED-Text-Instruct-1.5B | 435.50 | 22.46 |
| Llama-3.1-8B-Instruct | 4,430.71 | 5.81 |
| Llama-3.2-1B-Instruct | 430.56 | 30.73 |
| Llama-3.2-3B-Instruct | 1,218.22 | 12.16 |
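The two reported metrics can be combined into a rough per-request latency for the 240-in/10-out workload. The sketch below assumes that the per-user output throughput is approximately the reciprocal of the mean inter-token latency, so one request takes about the time to first token plus nine inter-token gaps. This is an illustrative estimate derived from the table, not a measured result, and `estimate_e2e_ms` is a hypothetical helper.

```python
"""Rough end-to-end latency estimate from the reported GenAI-Perf figures."""

# (time to first token in ms, output tokens/sec/user), copied from the table above.
RESULTS = {
    "c4ai-command-r7b-12-2024": (4667.31, 4.58),
    "EXAONE-3.5-2.4B-Instruct": (963.86, 14.23),
    "EXAONE-4.0-1.2B": (329.37, 31.62),
    "EXAONE-Deep-2.4B": (886.35, 13.03),
    "HyperCLOVAX-SEED-Text-Instruct-1.5B": (435.50, 22.46),
    "Llama-3.1-8B-Instruct": (4430.71, 5.81),
    "Llama-3.2-1B-Instruct": (430.56, 30.73),
    "Llama-3.2-3B-Instruct": (1218.22, 12.16),
}

OUTPUT_TOKENS = 10  # output length used in the benchmark


def estimate_e2e_ms(ttft_ms: float, tokens_per_sec: float,
                    output_tokens: int = OUTPUT_TOKENS) -> float:
    """TTFT plus (output_tokens - 1) inter-token gaps.

    Assumption: per-user throughput is roughly 1 / mean inter-token latency.
    The first token is already covered by TTFT, hence output_tokens - 1.
    """
    inter_token_ms = 1000.0 / tokens_per_sec
    return ttft_ms + (output_tokens - 1) * inter_token_ms


if __name__ == "__main__":
    # Print models from fastest to slowest estimated request latency.
    for name, (ttft, tps) in sorted(RESULTS.items(),
                                    key=lambda item: estimate_e2e_ms(*item[1])):
        print(f"{name:<38} ~{estimate_e2e_ms(ttft, tps):7.0f} ms")
```

For example, Llama-3.2-1B-Instruct works out to about 430.56 + 9 × 32.54 ≈ 723 ms. Under this assumption the time to first token is the larger share of the estimate for every model in the table, which is expected when only 10 tokens are generated.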

Environment Prerequisites on the Host OS

Hardware

The container is designed to demonstrate the local LLM capabilities of the Mobilint NPU embedded in the AIR-310, Advantech’s edge AI hardware. Other compatible hardware includes:

- Mobilint MLA100 Low Profile PCIe Card

Software

- Docker Engine ≥ 28.2.2

- Mobilint SDK modules

  - Pre-compiled Mobilint-compatible LLM binaries (.mxq)

  - Mobilint ARIES NPU Driver

- NOTE: To access the files and modules, please contact tech-support@mobilint.com.

- To verify device recognition, run the following command in the terminal:

  ls /dev | grep aries0

  If the output includes aries0, the device is recognized by the system.

- For Debian-based operating systems, verify driver installation by running:

  dpkg -l | grep aries-driver

  If the output contains information about aries-driver, the device driver is installed.
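The two shell checks above can also be scripted, for example as part of a provisioning step. The sketch below is a hypothetical helper, not part of the Mobilint SDK. It reuses the device path `/dev/aries0` and package name `aries-driver` from the commands above, and it is slightly stricter than the `grep`: only `dpkg -l` rows with status `ii` (installed) count, so a removed package that left configuration files behind (`rc`) is not reported as installed.

```python
"""Python equivalent of the device and driver checks (a sketch)."""
import os
import shutil
import subprocess

DEVICE_PATH = "/dev/aries0"      # device node from `ls /dev | grep aries0`
DRIVER_PACKAGE = "aries-driver"  # package name from `dpkg -l | grep aries-driver`


def device_present(path: str = DEVICE_PATH) -> bool:
    """Does the ARIES device node exist?"""
    return os.path.exists(path)


def driver_listed(dpkg_output: str, package: str = DRIVER_PACKAGE) -> bool:
    """True if `dpkg -l` output shows the package with status "ii"."""
    for line in dpkg_output.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0].startswith("ii"):
            name = fields[1].split(":", 1)[0]  # drop a multiarch suffix like ":amd64"
            if name == package:
                return True
    return False


def driver_installed(package: str = DRIVER_PACKAGE) -> bool:
    """Run `dpkg -l` (Debian-based systems only) and inspect its output."""
    if shutil.which("dpkg") is None:
        return False  # not Debian-based; this check does not apply
    result = subprocess.run(["dpkg", "-l"], capture_output=True, text=True, check=False)
    return driver_listed(result.stdout, package)


if __name__ == "__main__":
    print("device node present:", device_present())
    print("driver package installed:", driver_installed())
```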

Container Information

Directory Structure

├── backend
│   └── src
└── frontend
    ├── app
    │   └── components
    └── public
        └── fonts

Container Components

- Mobilint Runtime Library (latest stable release)

- Web-based GUI frontend (Next.js-based)

- Python LLM server backend (Socket.IO-based)

Quick Start Guide

Please refer to the Mobilint repository in the Advantech Container Catalog GitHub for the detailed Quick Start Guide and scripts.

Install Docker

Follow the official Docker installation guide.

After installation, add your user to the docker group by following the Linux post-installation steps.

Create Docker Network & Build Image

docker network create mblt_int

docker compose build
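`docker network create` fails if the network already exists, so re-running these two commands on a host that was set up earlier stops at the first step. The minimal Python sketch below creates `mblt_int` only when `docker network inspect` cannot find it, then builds the images. The helper names `plan`, `network_exists`, and `prepare` are hypothetical, and the script assumes it is run from the directory containing the compose file.

```python
"""Idempotent version of the network-and-build step (a sketch)."""
import subprocess
from typing import List

NETWORK = "mblt_int"  # network name from the docker network create step


def plan(network_present: bool, name: str = NETWORK) -> List[List[str]]:
    """Return the commands still needed, skipping network creation if done."""
    commands: List[List[str]] = []
    if not network_present:
        commands.append(["docker", "network", "create", name])
    commands.append(["docker", "compose", "build"])
    return commands


def network_exists(name: str = NETWORK) -> bool:
    """`docker network inspect` exits non-zero when the network is missing."""
    probe = subprocess.run(["docker", "network", "inspect", name],
                           capture_output=True, text=True)
    return probe.returncode == 0


def prepare(name: str = NETWORK) -> None:
    """Run the planned commands in the current directory."""
    for cmd in plan(network_exists(name), name):
        print("running:", " ".join(cmd))
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure


if __name__ == "__main__":
    prepare()
```

Keeping the decision in `plan` separate from the calls that touch Docker makes the logic easy to check without a Docker daemon.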

Run

docker compose up

Set Production Mode

This demo was originally designed for single-user demonstration purposes.

However, you can enable multi-user functionality by setting the PRODUCTION environment variable.

To do this, copy backend/src/.env.example to backend/src/.env and set PRODUCTION="True".

In production mode, selecting a different model does not take effect immediately. Instead, the server automatically loads the requested model for each LLM request as needed.
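The copy-and-edit step can be automated. The minimal sketch below assumes `.env.example` uses plain `KEY=VALUE` lines. It differs from a plain copy in one respect: it copies the template only when `.env` does not exist yet, so local edits are kept on re-runs. `set_production` and `enable_production` are hypothetical helpers. The sketch only edits the file; it assumes the backend reads `.env` at startup, so the containers would need a restart afterwards.

```python
"""Turn on production (multi-user) mode in backend/src/.env (a sketch)."""
import shutil
from pathlib import Path
from typing import List

SETTING = 'PRODUCTION="True"'


def set_production(lines: List[str]) -> List[str]:
    """Return the .env lines with PRODUCTION forced to "True".

    An existing PRODUCTION=... line is replaced in place; if there is none,
    the setting is appended. Assumes plain KEY=VALUE lines.
    """
    updated: List[str] = []
    found = False
    for line in lines:
        if line.split("=", 1)[0].strip() == "PRODUCTION":
            updated.append(SETTING)
            found = True
        else:
            updated.append(line)
    if not found:
        updated.append(SETTING)
    return updated


def enable_production(src_dir: str = "backend/src") -> Path:
    """Create .env from .env.example if needed, then set PRODUCTION."""
    src = Path(src_dir)
    env_file = src / ".env"
    if not env_file.exists():
        # Copy the template only once so existing local edits are kept.
        shutil.copyfile(src / ".env.example", env_file)
    lines = env_file.read_text().splitlines()
    env_file.write_text("\n".join(set_production(lines)) + "\n")
    return env_file


if __name__ == "__main__":
    print("updated", enable_production())
```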

Change list of models

You can change the list of LLMs by editing backend/src/models.txt. The changes take effect when the server is restarted.
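The format of `models.txt` is not described here. The minimal sketch below assumes one model name per line, with names like those in the performance table. It skips blank lines and treats `#` lines as comments. The comment convention is a hypothetical assumption that the real backend may not support. Checking the edited list for duplicates before restarting the server can catch mistakes early. `parse_models` is a hypothetical helper.

```python
"""Sanity-check backend/src/models.txt before restarting the server (a sketch)."""
from pathlib import Path
from typing import List, Tuple


def parse_models(text: str) -> Tuple[List[str], List[str]]:
    """Return (models in file order, duplicate entries).

    Assumes one model per line; blank lines and '#' lines are skipped
    (the '#' comment convention is an assumption, not a documented format).
    """
    models: List[str] = []
    duplicates: List[str] = []
    for raw in text.splitlines():
        name = raw.strip()
        if not name or name.startswith("#"):
            continue
        if name in models:
            duplicates.append(name)
        else:
            models.append(name)
    return models, duplicates


if __name__ == "__main__":
    models, dups = parse_models(Path("backend/src/models.txt").read_text())
    print(f"{len(models)} model(s):", ", ".join(models))
    if dups:
        print("duplicate entries:", ", ".join(dups))
```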

Change text prompts

You can change the system prompts without rebuilding the Docker image by editing backend/src/system.txt and backend/src/inter-prompt.txt. The changes take effect when the conversation is reset.

Run in the background

docker compose up -d

Shut down the background containers

docker compose down

Once the containers are running:

- From the GUI, select a model from the list.

- Interact with the loaded LLM as needed.

- For unexpected errors, please contact tech-support@mobilint.com.

Advantech × Mobilint: About the Partnership

Advantech and Mobilint have partnered to bring advanced deep learning applications, including large language models (LLMs), multimodal AI, and advanced vision workloads, fully at the edge.

Advantech’s industrial edge hardware, integrating Mobilint’s NPU AI accelerators, provides high-throughput, low-latency inference without cloud dependency.

Preloaded and validated on Advantech systems, Mobilint’s NPU enables immediate deployment of optimized AI applications across industries, including manufacturing, smart infrastructure, robotics, healthcare, and autonomous systems.

License

Copyright © 2025 Mobilint, Inc. All rights reserved.

Provided “as is” without warranties.